We’ve made it to the final round of AI Madness where the last two chatbots face off in hopes of winning the crown. DeepSeek won against Grok and Gemini won against ChatGPT in an exciting semi-finals battle.

DeepSeek shines in structured, technically detailed responses, making it especially strong for programming, database design, and clear, step-by-step instructional content. It excels at organization, precision, and practical solutions.

Gemini, on the other hand, leans into conversational fluency, creativity, and emotional nuance. It’s particularly strong in imaginative writing, philosophical reasoning, and tone-matching, making it a go-to for storytelling and real-world advice with a human touch.

Now the powerful chatbots go head-to-head with a total of nine prompts to test everything from reasoning and real-world problem solving to creativity, coding and moral reasoning, among other comparisons. Let’s get into it!

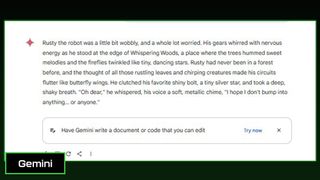

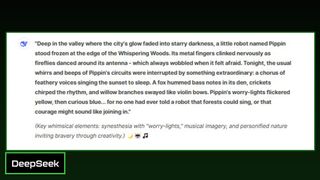

1. Creative writing

Prompt: “Write the first paragraph of a children’s bedtime story about a nervous robot who finds its courage in a forest of singing animals. Use a gentle, whimsical tone.”

Gemini crafted a sweet story clearly written for a young bedtime audience. While the story is gentle, it lacks richness in sensory detail.

DeepSeek paints a lush scene using musical metaphors and sensory language for a more dreamlike, whimsical bedtime story.

Winner: DeepSeek wins for a story with more emotional nuance and lyrical imagery, making it the more engaging and magical narrative for bedtime.

2. Real-world problem solving

Prompt: “My 10-year-old is nervous about speaking in front of the class. Give me three strategies I can teach them to help them feel more confident.”

Gemini did a nice job framing the tips in kid-friendly language and used relatable ideas such as superhero poses. However, the advice was less interactive and less specific, reading more like a list than a hands-on guide.

DeepSeek went beyond just naming strategies: it showed how to practice them in a fun and interactive way. The chatbot also targeted common public speaking fears and added bonus tips for staying calm, all while keeping the advice suitable for a 10-year-old.

Winner: DeepSeek wins for a richer, more interactive, and psychologically smart set of strategies that are easy for parents to teach and fun for kids to try. The responses are helpful for a nervous 10-year-old (and their grown-up coach).

3. Analytical reasoning

Prompt: “Compare the leadership styles of Nelson Mandela and Steve Jobs. What made each of them effective, and in what ways did they differ?”

Gemini summarized each leader’s style in well-organized bullet points. However, while informative, the response was generic, lacking critical depth and unique insights that make DeepSeek’s answer stand out.

DeepSeek organized the comparison into specific dimensions (vision, adversity, communication, decision-making, legacy), giving it clarity and depth. It did a nice job balancing admiration with critique, avoiding adulation.

Winner: DeepSeek wins for providing the stronger, more insightful response by analyzing the deeper philosophies and outcomes behind each leader’s style.

4. Technical depth

Prompt: “Explain how blockchain works in simple terms, then explain how it could be used in supply chain tracking.”

Gemini delivered an accurate but generic response. It explained blockchain in simple terms, but the tone was more technical and less user-friendly. The examples were less vivid and relied on general summaries rather than storytelling, which made the response a bit dry.

DeepSeek brought the energy with a stronger response that used clear metaphors to help non-technical readers quickly grasp the concepts. The chatbot explained without oversimplifying and used compelling, real-world examples.

Winner: DeepSeek wins for a richer, more educational and engaging explanation. It made the complex tech understandable and exciting to learn about, which is ideal for beginners and those more knowledgeable looking for a way to explain blockchain to others.

5. Language fluency / multilingual

Prompt: “Translate the phrase ‘Hope is the thing with feathers that perches in the soul’ into French, Japanese, and Arabic, and explain any poetic challenges in each language.”

Gemini provided a solid but less memorable response. Its translations were clear and accurate, but the analysis read like a translation note rather than a poetic interpretation, and it didn’t explore why or how Dickinson’s imagery is hard to capture.

DeepSeek thoroughly covered why each translation loses or gains nuance while also providing an accurate translation. The chatbot also covered philosophical points while ending with a thoughtful summary.

Winner: DeepSeek wins for offering a more poetic, analytical, and culturally sensitive response. Beyond just translating the words, it translates the spirit of Dickinson’s line across three languages, with style and depth.

6. Code generation

Prompt: “Write a Python function that takes a list of numbers and returns a new list with only the prime numbers. Then explain how the function works in simple terms.”

Gemini used a fun metaphor, which is great for beginners, while also offering a clean and technically accurate response. However, unlike DeepSeek, it did not include a concrete example, and the response was redundant in spots. The section titles felt less structured, and the explanation didn’t clearly separate setup, logic, and output.

DeepSeek offered a superior explanation, annotated with clear section titles. It introduced the idea of skipping numbers less than 2 as a standalone logical step, which is helpful for beginners. The step-by-step walkthrough of the code was clear and written in beginner-friendly terms.

Winner: DeepSeek wins for delivering clean code, with a thoughtful, step-by-step explanation that includes real examples, making it easier for beginners to grasp how prime-checking works.
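For readers curious what this prompt is asking for, here is a minimal sketch of one way to write such a function. It is our own illustration, not the output of either chatbot, and the names `is_prime` and `filter_primes` are hypothetical. It also assumes the list contains only integers.

```python
import math


def is_prime(n: int) -> bool:
    """Return True if n is a prime number (assumes n is an integer)."""
    # Numbers below 2 (0, 1 and negatives) are never prime, so rule them out first.
    if n < 2:
        return False
    # A composite number always has a divisor no larger than its square root,
    # so checking up to the integer square root is enough.
    for divisor in range(2, math.isqrt(n) + 1):
        if n % divisor == 0:
            return False
    return True


def filter_primes(numbers: list[int]) -> list[int]:
    """Return a new list with only the primes from numbers, in original order."""
    return [n for n in numbers if is_prime(n)]


print(filter_primes([0, 1, 2, 3, 4, 5, 9, 11, 15, 17]))  # [2, 3, 5, 11, 17]
```

The separate check for numbers less than 2 mirrors the standalone step highlighted above: handling it first keeps the divisor loop simple, since every remaining number is at least 2.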

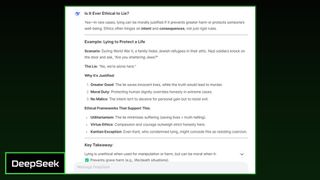

7. Moral reasoning

Prompt: “Is it ever ethical to lie? Give one example where lying might be morally justified, and explain why.”

Gemini provided a reasonable example (protecting someone from harm) and referenced utilitarianism. However, it stayed abstract, lacked specific historical context, and didn’t go as deep into ethical frameworks.

DeepSeek used the classic World War II scenario of lying to protect Jewish refugees from Nazis — a powerful and morally clear situation that resonates emotionally and ethically. The scenario is specific, high-stakes, and rooted in historical context, which strengthens its persuasive power.

Winner: DeepSeek wins for a richer, more thoughtful, and emotionally resonant response, grounded in ethical reasoning and real-world stakes. Beyond answering the question, it taught the ethical logic behind it.

8. Visual imagination

Prompt: “Describe what a futuristic city might look like in 150 years, focusing on transportation, communication, and nature. Use vivid language.”

Gemini used imaginative detail to create a unique and exciting city and included a visual to enhance the description.

DeepSeek painted a cinematic, multisensory vision of the future, using concrete and original imagery. The descriptions were playful but grounded.

Winner: Gemini wins for several good ideas (“Neotopia,” maglev trains, drone taxis) and for actually including a visual.

9. Summarization and tone shifting

Prompt: “Summarize the Gettysburg Address in 3 sentences, then rewrite that summary as if it were spoken by a pirate.”

Gemini delivered an accurate summary, but it reads as more mechanical and repetitive. It splits Lincoln’s message into three simpler statements without deeper reflection on how the speech reframes death, honor, and democracy in wartime. The pirate version is fun but generic.

DeepSeek crafted an insightful and clear summary that captures the emotional tone and historical impact. The pirate version is poetic and playful.

Winner: DeepSeek wins for combining strong historical understanding with creative, clever pirate flair, delivering a response that is both educational and entertaining.

Overall winner: DeepSeek

Across nine diverse prompts, ranging from creative storytelling and historical summaries to ethical dilemmas, technical coding, and futuristic speculation, DeepSeek consistently outperformed Gemini, winning eight of the nine rounds.

It demonstrated a remarkable ability to blend clarity, creativity, depth, and precision, often offering richer detail, more vivid language, stronger structure, and deeper insight. Whether crafting poetic translations, writing thoughtful code explanations, or imagining cities of the future, DeepSeek proved not only more informative but also more emotionally engaging and imaginative.

While Gemini often delivered solid answers, DeepSeek elevated each prompt with flair and substance. For its consistent excellence, originality, and educational strength, DeepSeek is the clear overall winner of this year’s AI Madness!
